AI is creating fake historical photos, and that's a problem

The increasing sophistication of AI-generated photos raises concerns about the reliability of visual evidence

When I first started colorizing photos back in 2015, some of the reactions I got were, well, pretty intense. I remember people sending me these long, passionate emails, accusing me of falsifying and manipulating history. It was a fascinating reaction, and one that really caught me off guard.

At first, I couldn't help but wonder how some people could be so... clueless?! I mean, did they really think I was sitting there with a paintbrush applying gouache to the original negatives like some sort of deranged art forger? The idea was so ridiculous that it was almost funny, but mostly it just made me want to bang my head against a wall in frustration. Upon reflection, though, I started to understand why seeing photos suddenly come to life in vivid color, after knowing them only in black and white for so long, was unsettling for many. The process was new, so nobody really understood how it worked or what could be done with it; it challenged what people were used to; and it raised important questions about the ethics of modifying someone else's work. (For the record, I only work with photos that are in the public domain, that I have received permission to colorize, or that I have licensed, which means paying for the rights to work on them.)

In hindsight, I realize that this initial skepticism was not only natural but also healthy, and it made me truly understand the responsibility that I have.

“History is who we are and why we are the way we are.” - David McCullough

Throughout my career, I've always stuck to one key principle: a strong respect for historical accuracy and the integrity of the original photographs. Before adding any color to a picture, I dive deep into research mode. This involves endless hours of examining documents, consulting experts, seeking out modern reproductions, and scrutinizing every detail in each image. What was the exact shade of a World War II soldier's uniform? What color were the buildings on that particular street in the 1920s? By asking these kinds of questions and applying the answers I find to the photos (which means reproducing the colors the photographer might have seen when pressing the shutter, or getting as close to them as I can), I aim to prove that colorization, when done with a serious approach, can actually enhance our understanding of the past, making these moments in history feel more human and more connected to our own lives.

Thankfully, as time went on and the technique became more refined and understood, the initial shock gave way to appreciation and curiosity. And it's been really cool to see this happening.

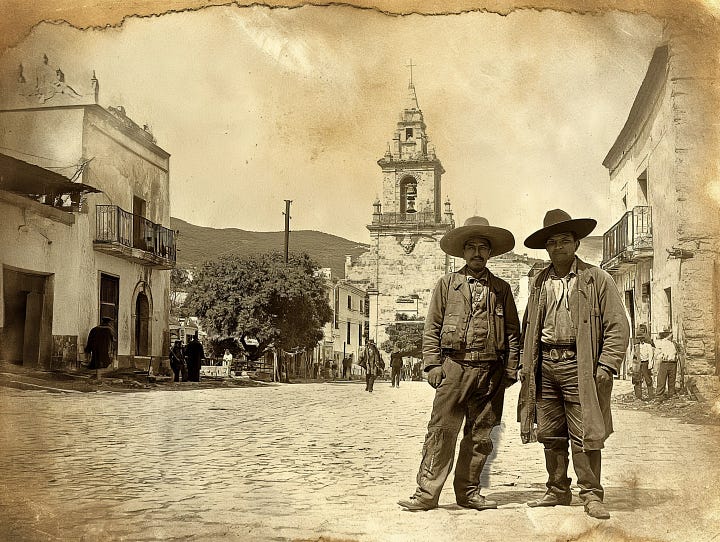

Anyway, fast forward to today, and we are facing a much more serious issue: the emergence of AI-generated "vintage" photos. I'm not referring to the obviously fake ones that are created for the sake of humor or artistic expression. If you come across a picture of Napoleon giving a TED Talk or Mata Hari taking a selfie with an iPhone, chances are you're not going to mistake it for a genuine historical photo. The real danger lies in those images that are crafted with the explicit intention of deceiving people — the ones that are so convincingly realistic that they could easily pass for authentic historical photographs.

And trust me, these generated images are getting scarily good. With advanced machine learning techniques and vast amounts of historical data to train on, these AI models can churn out "vintage" photos that are virtually indistinguishable from the real ones. They can mimic the grainy textures, the soft focus, and even the damage that I spend hours restoring and removing from the photographs that I work on.

Just to give you a concrete example of how pervasive this issue is becoming: a few days ago, I stumbled upon two Instagram pages that have started sharing these fake historical photos, passing them off as real to their thousands of followers, complete with fabricated captions and all that. The last time I checked, the most recent of these photos had over 5,000 likes.

Now, granted, not every single one of those likes was necessarily from someone who was completely deceived. Some people might have just been scrolling through their feed and hit the heart button without really thinking about it. Others might have recognized the photo as fake but still appreciated it on an artistic level. But even if we're being conservative and assuming that only a fraction of those 5,000 people truly believed the image was authentic, that's still a significant number of individuals who have been exposed to a piece of misinformation masquerading as historical fact.

And that's just one post on one platform. Multiply that by the countless other social media accounts, websites, and even publications that could potentially be spreading these images, and you start to see the scale of the problem. The more these fake images circulate, the harder it becomes to separate fact from fiction. Each new post or share distorts the truth a little bit more, until we're left with a version of the past that bears little resemblance to reality.

But hey, maybe I'm just being overly dramatic. It's not like there are people out there who might want to, oh, I don't know, intentionally manipulate history to fit their own political agenda or anything. Right?

Many people argue that we already can't be entirely certain about the accuracy of historical events as they are presented to us, and that the introduction of AI-generated photos doesn't fundamentally change this reality. They might point to examples of historical revisionism, or to the inherent subjectivity of historical narratives, to suggest that our understanding of the past has always been shaped by various forces and agendas. Some might say that history has always been a contested space, subject to interpretation and manipulation by those in power. And to a certain extent, that's true. We know that historical narratives can be shaped by political, cultural, and social factors, and that our understanding of the past is always filtered through the lens of the present.

But here's the thing: while traditional forms of historical distortion, like propaganda or revisionism, can certainly be influential, they still operate within certain bounds of plausibility. They might emphasize certain facts over others, or present events in a particular light, but they're still constrained by the available evidence and the limits of human credulity. AI-generated photos, on the other hand, have the potential to create entirely new realities out of nothing. They're not just tweaking or reinterpreting existing historical records - they're fabricating them from scratch. And when those fabrications are convincing enough, they can be incredibly difficult to detect or debunk.

Think about it this way: if you come across a piece of historical information, you can usually fact-check it against other sources or use your own critical thinking skills to assess its credibility. But if you're presented with a hyper-realistic AI-generated photo that seems to depict a historical scene or figure, how do you even begin to verify its authenticity? How do you know whether it's based on actual evidence or just some algorithm's imagination?

Honestly, just thinking about this is enough to make me want to curl up in a ball and rock back and forth.

In light of these risks, it is crucial that we recognize the unique challenges this situation presents and take proactive steps to mitigate the potential harm. I believe this will require a collective effort that includes developing new technologies and methodologies for detecting and authenticating images, and establishing clear ethical guidelines and standards for the use of AI.

Someone pointed out to me on Twitter that the EU took a significant step in this direction last week. The Artificial Intelligence Act, which is expected to take effect later this year, sets a global precedent for governments grappling with the regulation of rapidly evolving AI technology. Under these new guidelines, AI-generated deepfake content depicting existing people, places, or events must be clearly labeled as artificially manipulated.

Although the primary purpose of the Act is to ensure consumer safety, its provisions have far-reaching consequences for the use of AI in historical contexts, setting an important precedent for the development of ethical standards in this field.

As someone who has dedicated their career to preserving and sharing historical photographs, I've always been fascinated by the power of images to shape our understanding of the past. There's something incredibly compelling about the idea that a single photograph can capture a moment in time, freezing it for future generations to study and interpret. But the rise of AI-generated historical photos has thrown a wrench into this whole notion. We're now faced with the possibility of a world where the images we rely on as important assets to help us understand our past are no longer tethered to reality, but are instead the product of complex algorithms and machine learning.

But who knows, perhaps I'm being too pessimistic, sounding like this cranky old lady who's rambling on about how the youngsters these days are ruining everything with their fancy AI, or, even worse, like those people who saw my own work as this wild, dangerous, and crazy new thing. Maybe I'm just falling into the same trap, making catastrophic predictions and sweeping generalizations based on the limited evidence we have available right now.

After all, every new technology brings with it both risks and opportunities, and it's up to us as a society to figure out how to navigate that balance. Maybe the rise of AI-generated historical photos will actually end up deepening our appreciation for the real thing. Or maybe we'll just look back and wonder why we ever thought it was a good idea to let machines teach us about history.

Either way, it's sure to be a wild ride.

I'm curious to hear your thoughts, though. How do you think we can best navigate this issue? I'm looking forward to reading your comments below!

See you soon…

No law is going to force criminals or those spreading political propaganda to label their output as “AI-Generated”. And yet this is the most destructive misuse of AI.

This seems like a very real problem. We are all trying to learn how to not accept material as "real" until we verify whether it's AI-generated or not, but it wouldn't have crossed my mind to direct that skepticism at an "old" photo. Having looked at your examples, I see that we have to be skeptical across the board now, which is kind of sad.